Computers today are fast, precise, and powerful. My desktop at home runs Red Dead Redemption II smooth as butter while also streaming Japanese music from YouTube and illegally downloading old episodes of Star Trek. Why would we even want to replace these machines?

In contrast with the brain, CMOS devices (the basis of nearly all modern digital electronics) are high-power and high-precision, and they are driven by high-frequency internal clocks. As discussed previously, the brain sits at the opposite end of the spectrum on every one of these aspects.

Another fascinating feature of the brain is its plasticity: it is what allows us to learn new languages and skills with ease, and it is responsible for muscle memory. Neuroplasticity is great!

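According to an August 2024 Nature paper, current deep learning algorithms lose plasticity when they are trained continually on new tasks. Over time, a growing fraction of units in the network stop activating altogether, so the network effectively "gets rid" of neurons (and the synapses attached to them), and its ability to learn new things degrades. [1]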
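One simple way to put a number on this loss is to count "dead" units: ReLU neurons whose output is zero for every input in a batch. The sketch below shows that measurement for a single dense layer. The layer shape, the random inputs and the forced-negative bias are illustrative assumptions, not the setup used in the paper.

```python
import numpy as np

def dead_unit_fraction(inputs: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> float:
    """Fraction of ReLU units in one dense layer that output zero for every input.

    inputs:  shape (n_samples, n_in)
    weights: shape (n_in, n_units)
    bias:    shape (n_units,)
    """
    pre_activation = inputs @ weights + bias         # (n_samples, n_units)
    activations = np.maximum(pre_activation, 0.0)    # ReLU nonlinearity
    dead = np.all(activations == 0.0, axis=0)        # True where a unit never fires
    return float(dead.mean())

# Illustrative layer (assumed sizes): 8 inputs, 4 ReLU units.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(256, 8))
w = rng.normal(size=(8, 4))
b = np.zeros(4)
b[0] = -100.0  # forces unit 0's pre-activation to stay negative, so it is "dead"
print(dead_unit_fraction(x, w, b))
```

A unit that never fires passes no gradient back through the ReLU, so ordinary training cannot revive it. This is why a rising dead-unit fraction indicates lost capacity to learn.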

Modern neural nets used for AI, sound, or image recognition have hundreds of millions of synaptic weights (often more) that must be accessed repeatedly during processing. In software neural networks, these weights are stored in the computer's memory as numbers, typically floating-point values, or low-bit integers in quantized models. Storing each weight takes multiple bits, and every weight must be moved from memory to wherever it is processed. This both increases energy use and physically limits computational speed. This is the so-called von Neumann bottleneck, and it is one limitation of modern computing that low-power computing seeks to remedy.
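To get a feel for how much data is involved, here is a back-of-the-envelope sketch of the memory needed to hold a network's weights at a few common numeric formats. The 300-million-weight model size is a hypothetical example. The code also assumes every weight is read from memory once per full pass, ignoring on-chip caching.

```python
# Bits needed per weight for some common numeric formats.
BITS_PER_WEIGHT = {"float32": 32, "float16": 16, "int8": 8}

def weight_storage_bytes(n_weights: int, fmt: str) -> int:
    """Bytes needed to store n_weights in the given numeric format."""
    return n_weights * BITS_PER_WEIGHT[fmt] // 8

n_weights = 300_000_000  # hypothetical model with 300 million weights
for fmt in BITS_PER_WEIGHT:
    megabytes = weight_storage_bytes(n_weights, fmt) / 1e6
    # Assumes each weight is fetched from memory once per full pass (no caching).
    print(f"{fmt:>7}: {megabytes:,.0f} MB moved per full pass over the weights")
```

Even at 8 bits per weight, hundreds of megabytes have to travel between memory and processor for every pass. That traffic is exactly the von Neumann bottleneck.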

Current CMOS neurons require about 1,000 transistors each, and useful neural nets need millions of these neurons. The number of neurons that fit onto a single chip is therefore a limiting factor for CMOS-based neural networks. [2]
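The arithmetic behind that limit is simple. The sketch below uses the roughly 1,000-transistors-per-neuron figure from the text. The 10-billion-transistor chip budget is a hypothetical round number, and the model ignores the transistors needed for synapses, memory and wiring, so real chips would fit fewer neurons.

```python
TRANSISTORS_PER_NEURON = 1_000  # approximate figure quoted in the text

def transistors_needed(n_neurons: int, per_neuron: int = TRANSISTORS_PER_NEURON) -> int:
    """Transistors for the neurons alone (synapses and wiring not counted)."""
    return n_neurons * per_neuron

def neurons_per_chip(transistor_budget: int, per_neuron: int = TRANSISTORS_PER_NEURON) -> int:
    """Whole neurons that fit in a given transistor budget."""
    return transistor_budget // per_neuron

print(transistors_needed(1_000_000))      # a one-million-neuron network
print(neurons_per_chip(10_000_000_000))   # hypothetical 10-billion-transistor chip
```

A million neurons already consume a billion transistors before a single synapse is built. This is why denser neuron devices are attractive.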

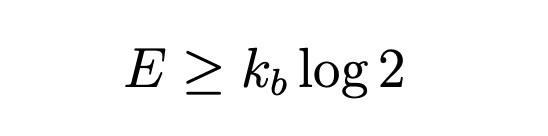

The theoretical minimum amount of energy needed to erase a single bit of information is called the Landauer limit, and it is calculated as:

$$E_{\min} = k_B T \ln 2$$

Here $k_B$ is the Boltzmann constant, $T$ is the temperature of the system in kelvin, and the logarithm is the natural logarithm (base e). This is the only math in this whole series, I swear.
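The Landauer limit, $k_B T \ln 2$, is easy to evaluate numerically. This sketch computes it at room temperature (assumed here to be 300 K). It also includes a helper, `times_above_limit`, which is a hypothetical name, for comparing any per-bit energy you have, such as a measured or datasheet value, against the limit.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant in J/K (exact in the 2019 SI)
EV = 1.602176634e-19   # joules per electronvolt (exact)

def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given temperature: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)

def times_above_limit(energy_per_bit_j: float, temperature_k: float = 300.0) -> float:
    """How many times a given per-bit energy exceeds the Landauer limit."""
    return energy_per_bit_j / landauer_limit_joules(temperature_k)

e_min = landauer_limit_joules(300.0)  # room temperature, assumed 300 K
print(f"{e_min:.3e} J = {e_min / EV:.4f} eV per bit")
```

At room temperature this comes out to a few zeptojoules (about 2.9 × 10⁻²¹ J) per bit. The limit scales linearly with temperature, so a colder system has a lower floor.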

Modern computer architectures and hardware operate millions of times above this limit, which suggests that engineering and theoretical advances could still reduce the energy cost of computing considerably. Existing neuromorphic hardware has already cut energy costs in some applications. [3]

In the next post, I’ll discuss the history of the memristor, a device first theorized in the 1970s that can be used to emulate synapses.

[1] Dohare, Shibhansh, J. Fernando Hernandez-Garcia, Qingfeng Lan, Parash Rahman, A. Rupam Mahmood, and Richard S. Sutton. 2024. “Loss of Plasticity in Deep Continual Learning.” Nature 632 (8026): 768–74. https://doi.org/10.1038/s41586-024-07711-7.

[2] Marković, Danijela, Alice Mizrahi, Damien Querlioz, and Julie Grollier. 2020. “Physics for Neuromorphic Computing.” Nature Reviews Physics 2 (9): 499–510. https://doi.org/10.1038/s42254-020-0208-2.

[3] “A Nonvolatile Associative Memory-Based Context-Driven Search Engine Using 90 nm CMOS/MTJ-Hybrid Logic-in-Memory Architecture.” n.d. ResearchGate. Accessed December 11, 2024. https://www.researchgate.net/publication/264705907_A_Nonvolatile_Associative_Memory-Based_Context-Driven_Search_Engine_Using_90_nm_CMOSMTJ-Hybrid_Logic-in-Memory_Architecture.